By Dr Darina Slattery.

Reading Time: ~5 minutes

Featured Image Source: Photo by Lukas from Pexels

In this post:

- Introduction

- 1. Using VLE Analytics to Measure Student Engagement

- 2. Using Forum Interactions to Assess Online Participation

- 3. Using Course Quality Review Rubrics

- Conclusions

- References/Further Reading

Introduction

There are a number of ways to evaluate your online module. For example, you can use VLE analytics, examine forum interactions, and/or use a course quality review rubric. This article provides an overview of how you can use these three strategies to evaluate what worked, and what did not, in your blended or fully online module.

1. Using VLE Analytics to Measure Student Engagement

Most VLEs gather data on user activity and can generate analytics reports to help you measure student engagement. As Sulis (Sakai) is the UL-supported VLE, the remainder of this section focuses on Sulis analytics, but you will find similar data in other VLEs.

In Sulis, if you click on the ‘Statistics’ tool on the left (you may have to enable it under ‘Site Info > Manage Tools’), you will be presented with three tabs: Overview, Reports, and Preferences.

On the ‘Overview’ tab, you can view data relating to:

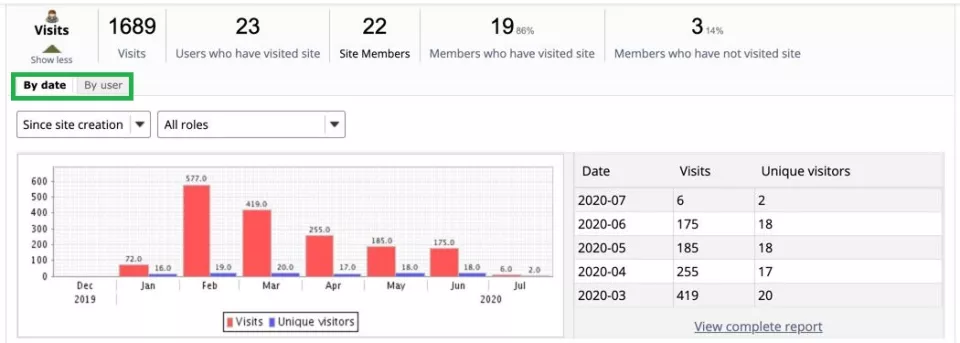

a) Visits: This area tells you how many visits and unique visits your module site has had, and who has or has not yet visited it. You can examine visits ‘by date’ or ‘by user’ by clicking on the relevant tab under ‘Visits’ (see Figure 1).

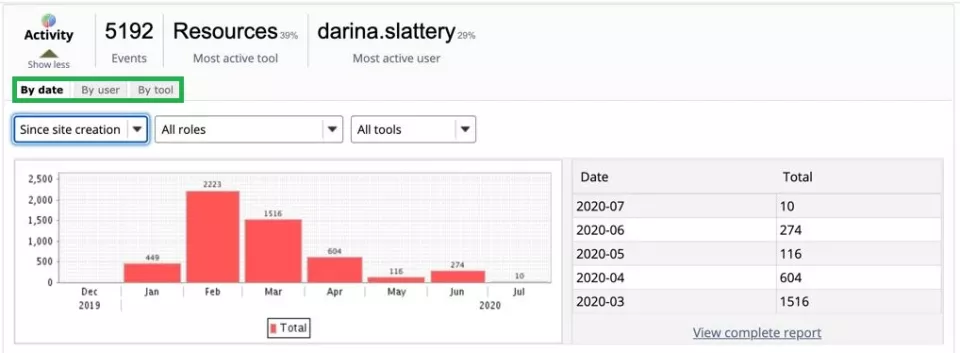

b) Activity: This area tells you which tool is used most, who the most active user is, and when peak usage occurs. You can view activity by date, by user, or by tool (see Figure 2).

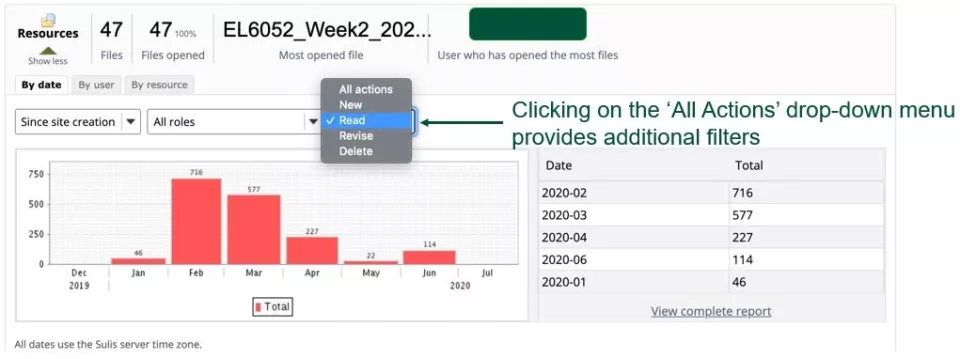

c) Resources: This area tells you the percentage of files opened, the most-opened file, and who has opened the most files. It can also tell you who has read, revised, deleted, or created resources (see Figure 3).

d) Lesson pages: This area tells you the percentage of lesson pages read, the most-read lesson page, and who has read the most lesson pages.

In addition to the ‘Overview’ tab, you can use the ‘Reports’ tab to create customised reports on specific types of activities, users, or files. The ‘Preferences’ tab lets you choose which tools and activities are included in the statistics and reports.
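If you export a custom report (for example, visits by user) and want to cross-check it against your class list, a short script can help. The Python sketch below is a minimal illustration only: it assumes the report can be saved as a CSV file with one row per visit and a column named `user`, which may not match the headers Sulis actually produces, and the helper names `visits_per_user` and `not_yet_visited` are hypothetical.

```python
import csv
import io
from collections import Counter

# Hypothetical export layout: one row per visit, with a 'user' column.
# Check the headers in your own export and adjust the column name.
SAMPLE_EXPORT = """user,date
student01,2021-02-01
student02,2021-02-01
student01,2021-02-03
"""


def visits_per_user(csv_text: str, user_column: str = "user") -> Counter:
    """Count visits per user from exported CSV text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row[user_column] for row in reader)


def not_yet_visited(enrolled: set[str], visits: Counter) -> set[str]:
    """Return enrolled students with no recorded visit (Counter gives 0 for missing keys)."""
    return {s for s in enrolled if visits[s] == 0}


visits = visits_per_user(SAMPLE_EXPORT)
enrolled = {"student01", "student02", "student03"}
print(dict(visits))
print(sorted(not_yet_visited(enrolled, visits)))
```

The ‘Overview’ tab already lists who has not visited, so a script like this is mainly useful when you are combining a custom report with other data, such as a class list from another system.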

This article only highlights a selection of the available analytics, so take the time to browse through the ‘Statistics’ tool when you get a chance. You might be surprised at how much data is readily available to you!

2. Using Forum Interactions to Assess Online Participation

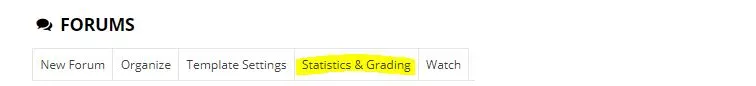

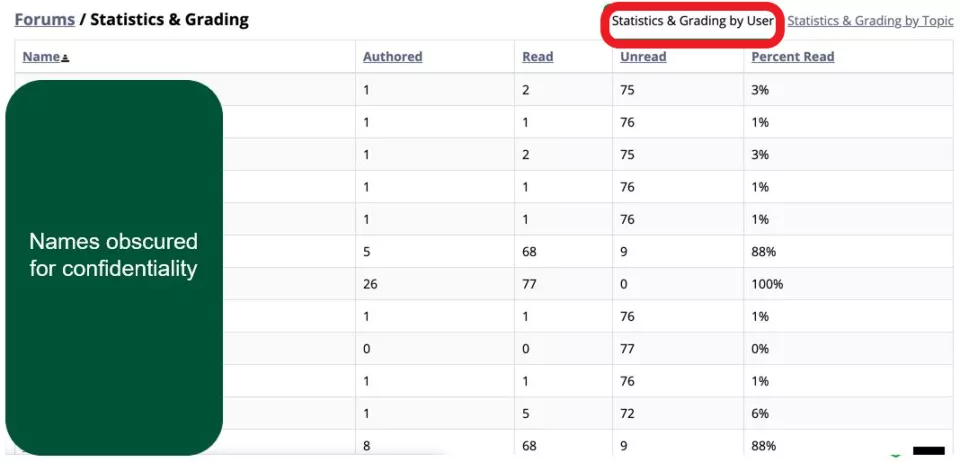

If you use the Sulis ‘Forums’ tool, there are additional built-in statistics that you can use to assess online participation. To access these statistics, click on the ‘Statistics & Grading’ tab within the ‘Forums’ tool (see Figure 4).

Within this area, you can view how many messages each student authored, how many messages they read and did not read, and the percentage of messages they read (see Figure 5).
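The percentage-read figure is simple arithmetic on the read and unread counts. As a hedged sketch, assuming that percentage read = read / (read + unread) and using hypothetical field names and made-up figures, the calculation and a simple check for students who have read fewer than half of the messages might look like this in Python:

```python
from dataclasses import dataclass


@dataclass
class ForumStats:
    """Per-student forum counts (hypothetical field names, e.g. copied
    from the 'Statistics & Grading' view into a spreadsheet)."""
    student: str
    authored: int
    read: int
    unread: int

    @property
    def percent_read(self) -> float:
        # Assumption: percentage read = read / (read + unread) * 100.
        total = self.read + self.unread
        return 0.0 if total == 0 else 100.0 * self.read / total


def flag_low_readers(stats: list[ForumStats], threshold: float = 50.0) -> list[str]:
    """Return students who read less than `threshold` percent of messages."""
    return [s.student for s in stats if s.percent_read < threshold]


# Illustrative (made-up) data, not real student records.
cohort = [
    ForumStats("Student A", authored=6, read=45, unread=5),
    ForumStats("Student B", authored=0, read=10, unread=40),
    ForumStats("Student C", authored=2, read=25, unread=25),
]
for s in cohort:
    print(f"{s.student}: authored {s.authored}, read {s.percent_read:.0f}%")
print("Below 50% read:", flag_low_readers(cohort))
```

A low reading percentage is only a prompt to look more closely; a student may have read messages in other ways (for example, via email notifications) that the statistics do not capture.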

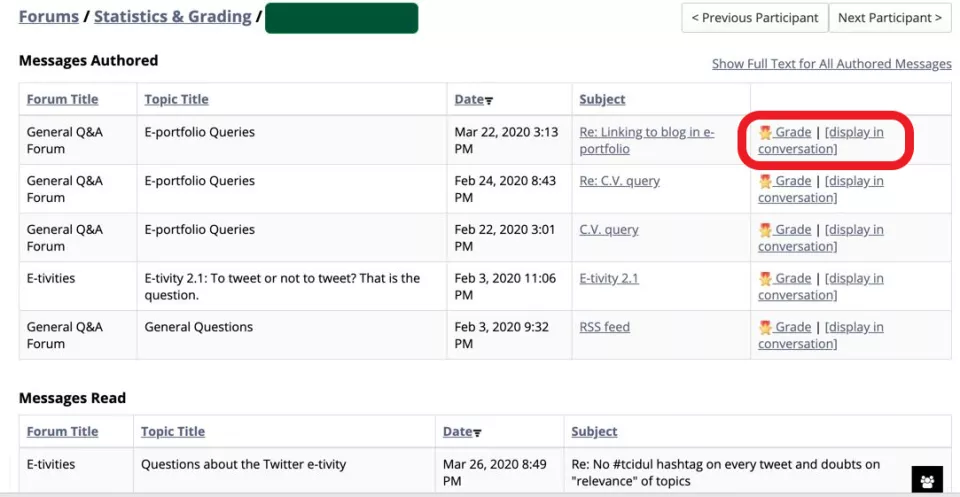

In addition, if you click on a particular student’s name (names have been obscured in the figure), you can view their messages in context by clicking ‘Display in Conversation’. You can also grade their postings in that space (see Figure 6).

Salmon (2004) suggests using qualitative and quantitative forum data to answer questions like:

- How many students logged on, read, and/or contributed?

- Which topics did learners (choose to) engage in?

- Did those who failed to take part in forum-based activities achieve lower results than those who did?

- Were there requests for new forum topics, and if so, when and why?

- Did forums benefit those for whom they were intended? For example, did students who were shy in live classes become more vocal in asynchronous forums?

- Could you identify students who were struggling by analysing their forum contributions?

- Did students spend sufficient time on the most challenging forum topics?
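Some of these questions can be answered with simple descriptive comparisons. For the question about lower results, a minimal Python sketch (with made-up records and a hypothetical `(student, posts_authored, final_result)` layout that you would assemble yourself from the forum statistics and your gradebook) compares the mean result of students who posted at least once with that of students who did not:

```python
from statistics import mean


def compare_results(records: list[tuple[str, int, float]]) -> tuple[float, float]:
    """Return the mean final result for (students who posted, students who did not).

    Each record is (student, posts_authored, final_result). This layout is
    hypothetical; build it from your own forum statistics and grades.
    """
    took_part = [result for _, posts, result in records if posts > 0]
    did_not = [result for _, posts, result in records if posts == 0]
    if not took_part or not did_not:
        raise ValueError("Need at least one student in each group")
    return mean(took_part), mean(did_not)


# Illustrative (made-up) data, not real student records.
records = [
    ("Student A", 6, 72.0),
    ("Student B", 0, 48.0),
    ("Student C", 2, 65.0),
    ("Student D", 0, 55.0),
]
participants, non_participants = compare_results(records)
print(f"Posted: {participants:.1f}  Did not post: {non_participants:.1f}")
```

A difference in means is only descriptive: it does not show that forum participation caused the difference, and small groups can produce misleading averages.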

These are just a selection of questions you might like to consider when assessing the nature of online participation.

3. Using Course Quality Review Rubrics

Course quality review rubrics can also help with module or programme evaluation. Most such rubrics cover similar topics, such as course (module) orientation, tools, instructional design, content, interaction, and assessment.

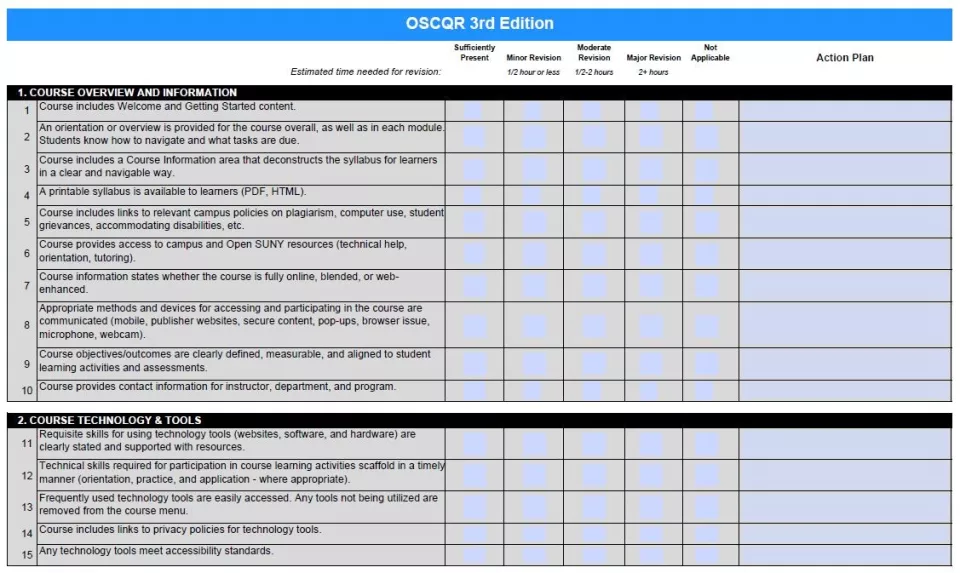

One such rubric, the SUNY Online Course Quality Review Rubric (OSCQR), can be used both formatively, to support effective course design, and summatively, as an evaluation tool. The rubric is based on the Community of Inquiry (CoI) framework (Garrison, Anderson, & Archer, 2000; Garrison, 2017) and incorporates important accessibility and universal design for learning (UDL) considerations.

OSCQR recommends adhering to a number of standards under the following headings:

- Course overview and information

- Course technology and tools

- Design and layout

- Content and activities

- Interaction

- Assessment and feedback

You can read about the standards on the OSCQR website (click on ‘Explanations, Evidence, & Examples’ in the top menu) or download a scorecard, which you can use to design your own modules (see Figure 7). Links to both are listed under References/Further Reading.
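If you complete a scorecard for an existing module, tallying the ratings under each heading can help you decide where to start revising. The Python sketch below assumes rating labels such as ‘Sufficiently Present’, ‘Minor Revision’, ‘Moderate Revision’, and ‘Major Revision’; check the exact wording in the scorecard you download, as these labels, the data layout, and the helper names are assumptions for illustration.

```python
from collections import Counter, defaultdict

# Assumed rating labels that indicate a standard needs some revision.
NEEDS_WORK = {"Minor Revision", "Moderate Revision", "Major Revision"}


def revisions_by_category(ratings: list[tuple[str, str]]) -> dict[str, Counter]:
    """Group (category, rating) pairs and count each rating within a category."""
    summary: dict[str, Counter] = defaultdict(Counter)
    for category, rating in ratings:
        summary[category][rating] += 1
    return dict(summary)


def categories_to_prioritise(ratings: list[tuple[str, str]]) -> list[str]:
    """List categories with at least one standard needing revision, most first."""
    summary = revisions_by_category(ratings)
    counts = {cat: sum(c[r] for r in NEEDS_WORK) for cat, c in summary.items()}
    return sorted((cat for cat in counts if counts[cat] > 0), key=lambda cat: -counts[cat])


# Illustrative (made-up) ratings for a handful of standards.
ratings = [
    ("Course overview and information", "Sufficiently Present"),
    ("Course overview and information", "Minor Revision"),
    ("Interaction", "Major Revision"),
    ("Interaction", "Moderate Revision"),
    ("Assessment and feedback", "Sufficiently Present"),
]
print(categories_to_prioritise(ratings))
```

Counting ratings treats every standard as equally important; you may prefer to weight ‘Major Revision’ more heavily than ‘Minor Revision’ when planning your changes.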

Conclusions

This article outlines three strategies to help you formatively and summatively evaluate your online module using VLE tools and other online resources.

About the Author

Dr Darina Slattery is a Senior Lecturer in e-learning, instructional design, and technical communication at UL. Her research interests include best practice in online education, professional development for online teachers, virtual teams, learning analytics, and online collaboration. She has been facilitating professional development initiatives for colleagues, in the form of DUO workshops, since 2014. She is the Project Lead for the UL VLE Review (phase 1) and an active member of the UL Learning Technology Forum (LTF). Her previous LTF blog article, ‘9 Tried-and-Trusted Strategies for Quality Online Delivery’, is also available on the LTF blog.

References/Further Reading

Accessibility, instructional design, and universal design:

CAST (2018) Universal Design for Learning (UDL) Guidelines Version 2.2: https://udlguidelines.cast.org/

Slattery, D. M. (2020) Implementing Instructional Design Approaches to Inform your Online Teaching Strategies: https://www.facultyfocus.com/articles/online-education/online-course-design-and-preparation/implementing-instructional-design-approaches-to-inform-your-online-teaching-strategies/

World Wide Web Consortium (2021) How to Meet Web Content Accessibility Guidelines (WCAG) (Quick Reference): https://www.w3.org/WAI/WCAG21/quickref/

Course quality review rubrics:

SUNY Online Course Quality Review Rubric (OSCQR), 3rd edition (2018): https://oscqr.suny.edu/ and https://drive.google.com/file/d/0B_VwCrtRb6p9X2c5ZFl0cm5SQjg/view

Quality Matters Higher Education Rubric, 6th edition (2020): https://www.qualitymatters.org/sites/default/files/PDFs/StandardsfromtheQMHigherEducationRubric.pdf

University of Ottawa Blended Learning Course Quality Rubric (2017): https://tlss.uottawa.ca/site/files/docs/TLSS/blended_funding/2017/TLSSQARubric.pdf

Learning analytics:

Sakai learning analytics: https://www.teachingandlearning.ie/resource/learning-analytics-features-in-sakai-2/

Moodle learning analytics: https://www.teachingandlearning.ie/resource/learning-analytics-features-in-moodle-2/

UL Student Evaluation and Learning Analytics (StELA) project: https://www.ul.ie/quality/stela

Models and frameworks for online learning:

Garrison, D. R. (2017) E-Learning in the 21st Century: A Community of Inquiry Framework for Research and Practice, 3rd edition. New York: Routledge.

Garrison, D. R., Anderson, T. and Archer, W. (2000) ‘Critical Inquiry in a Text-Based Environment: Computer Conferencing in Higher Education’, The Internet and Higher Education, 2 (2-3), 87-105.

Salmon, G. (2004) E-moderating, 2nd edition. London: Routledge.